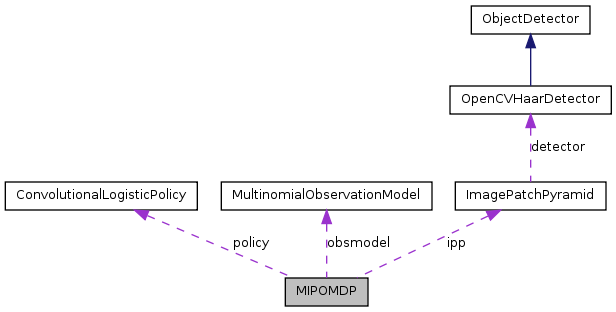

Machine Perception Primitive: An implementation of the "Multinomial IPOMDP" algorithm from Butko and Movellan, 2009 (see Related Publications).

More...

#include <MIPOMDP.h>

Inherited by MOMIPOMDP.

Public Member Functions | |

| MIPOMDP (CvSize inputImageSize, CvSize subImageSize, CvSize gridSize, int numSubImages, CvMat *subImageGridPoints, const char *haarDetectorXMLFile) | |

| Main Constructor: Manually create an MIPOMDP. | |

| MIPOMDP () | |

| Default Constructor: Create an MIPOMDP with the same properties as the MIPOMDP used in Butko and Movellan, CVPR 2009 (see Related Publications). NOTE: This requires "data/haarcascade_frontalface_alt2.xml" to be a haar-detector file that exists. | |

| virtual | ~MIPOMDP () |

| Default Destructor: Deallocates all memory associated with the MIPOMDP. | |

| void | saveToFile (const char *filename) |

| Save MIPOMDP Data: Save data about the structure of the MIPOMDP so that it can be persist past the current run of the program. This includes details about the structure of the IPP, the object detector used, the parameters of the multionmial observation model, the policy used. It does not include details about the state of the MIPOMDP (the current location of the target), but rather the properties of the model. | |

| void | resetPrior () |

| Interface with MIPOMDP: Reset the belief about the location of the object to be uniform over space (complete uncertainty). | |

| void | setTargetCanMove (int flag) |

| Interface with MIPOMDP: Tell MIPOMDP whether there is a possiblity that the target can move. When searching a static frame, set this to 0. When searching sequential frames of a movie, set this to 1. | |

| int | getTargetCanMove () |

| Interface with MIPOMDP: Figure out whether the MIPOMDP expects that the target can move. | |

| CvSize | getGridSize () |

| Interface with MIPOMDP: Find the shape of the grid that forms the basis for the MIPOMDP state space and action space. | |

| CvPoint | recommendSearchPointForCurrentBelief () |

| Interface with MIPOMDP State: Given the current point, where does the MIPOMDP's Convolutional logistic policy recommend looking? Since the CLP (usually) gives stochastic output, this will not always return the same result, even given the same belief state. | |

| double | getProb () |

| Interface with MIPOMDP State: Get an estimate of the probability that the MIPOMDP knows the exactly correct location of the target. | |

| double | getReward () |

| Interface with MIPOMDP State: The certainty (information-reward) about the location of the target, i.e. the mutual information (minus a constant) between all previous actions/observations and the target location. | |

| CvPoint | getMostLikelyTargetLocation () |

| Interface with MIPOMDP State: Find the grid-cell that is most likely to be the location of the target. | |

| CvPoint | searchNewFrame (IplImage *grayFrame) |

| Search function: Search an image (or frame of video) at a location recommended by the Convolutional Logistic Policy, and update belief based on what was found. | |

| CvPoint | searchNewFrameAtGridPoint (IplImage *grayFrame, CvPoint searchPoint) |

| Search function: Search an image (or frame of video) at a location decided by the calling program. and update belief based on what was found. | |

| virtual CvPoint | searchFrameAtGridPoint (IplImage *grayFrame, CvPoint searchPoint) |

| Search function: Search an image (or frame of video) at a location recommended by the calling program, and update belief based on what was found. | |

| CvPoint | searchFrameUntilConfident (IplImage *grayFrame, double confidenceThresh) |

| Search function: Search an image (or frame of video) at a sequence of fixation points recommended by the CLP, and update belief based on what was found. Employs an early-stop criterion of the first repeat fixation. Otherwise, stops at when confidence in the target location reaches a maximum value. | |

| CvPoint | searchFrameRandomlyUntilConfident (IplImage *grayFrame, double confidenceThresh) |

| Search function: Search an image (or frame of video) at a sequence of fixation points chosen randomly, and update belief based on what was found. Employs an early-stop criterion of the first repeat fixation. Otherwise, stops at when confidence in the target location reaches a maximum value. | |

| CvPoint | searchFrameForNFixations (IplImage *grayFrame, int numfixations) |

| Search function: Search an image (or frame of video) at a sequence of fixation points recommended by the CLP, and update belief based on what was found. Fixates the specified number of times (no early stopping is used). | |

| CvPoint | searchFrameForNFixationsOpenLoop (IplImage *grayFrame, int numfixations, int OLPolicyType) |

| Search function: Search an image (or frame of video) at a sequence of fixation points recommended by an open loop fixation policy, and update belief based on what was found. Fixates the specified number of times (no early stopping is used). | |

| CvPoint | searchFrameForNFixationsAndVisualize (IplImage *grayFrame, int numfixations, const char *window, int msec_wait) |

| Search function: Search an image (or frame of video) at a sequence of fixation points recommended by the CLP, and update belief based on what was found. Fixates the specified number of times (no early stopping is used). Visualize the process in a provided OpenCV UI Window, at a specified frame rate. | |

| CvPoint | searchFrameAtAllGridPoints (IplImage *grayFrame) |

| Search function: Search an image (or frame of video) at every grid-point (no early stopping is used). | |

| CvPoint | searchHighResImage (IplImage *grayFrame) |

| Search function: Apply the Object Detector to the entire input image. | |

| void | changeInputImageSize (CvSize newInputSize) |

| Interface with IPP: Change the size of the input image and the downsampled image patches. Omitting a newSubImageSize causes the smallest-used-scale to have a 1-1 pixel mapping with the downsampled image patch -- i.e. information is not lost in the smallest scale. | |

| void | changeInputImageSize (CvSize newInputSize, CvSize newSubImageSize) |

| Interface with IPP: Change the size of the input image and the downsampled image patches. | |

| int | getNumScales () |

| Interface with IPP: The total number of levels that the IP Pyramid has. | |

| CvPoint | gridPointForPixel (CvPoint pixel) |

| Interface with IPP: Map a pixel location in the original image into a grid-cell. | |

| CvPoint | pixelForGridPoint (CvPoint gridPoint) |

| Interface with IPP: Find the pixel in the original image that is in the center of a grid-cell. | |

| void | setGeneratePreview (int flag) |

| Interface with IPP: Turns on/off the code that modifies foveaRepresentation to visualize the process of fixating. | |

| void | setMinSize (CvSize minsize) |

| Interface with IPP: Set the minimum allowed subImageSize. | |

| void | useSameFrameOptimizations (int flag) |

| Interface with IPP: Set whether same-frame optimizations are being used. | |

| void | saveVisualization (IplImage *grayFrame, CvPoint searchPoint, const char *base_filename) |

| Interface with IPP: Save a variety of visual representations of the process of fixating with an IPP to image files. | |

| IplImage * | getCounts () |

| Interface with IPP: Get the count of faces found in each grid cell after the last search call. | |

| void | setHaarCascadeScaleFactor (double factor) |

| Interface with Object Detector: Sets the factor by which the image-patch-search-scale is increased. Should be greater than 1. By default, the scale factor is 1.1, meaning that faces are searched for at sizes that increase by 10%. | |

| void | setHaarCascadeMinSize (int size) |

| Interface with Object Detector: Sets the minimum patch size at which the classifier searches for the object. By default, this is 0, meaning that the smallest size appropriate to XML file is used. In the case of the frontal face detector provided, this happens to be 20x20 pixels. | |

| void | trainObservationModel (ImageDataSet *trainingSet) |

| Interface with Observation Model: Fit a multinomial observation model by recording the object detector output at different fixation points, given known face locations contained in an ImageDataSet. | |

| void | addDataToObservationModel (ImageDataSet *trainingSet) |

| Interface with Observation Model: Add data to an existing multinomial observation model by recording the object detector output at different fixation points, given known face locations contained in an ImageDataSet. | |

| void | resetModel () |

| Interface with Observation Model: Reset the experience-counts used to estimate the multinomial parameters. Sets all counts to 1. | |

| void | combineModels (MIPOMDP *otherPomdp) |

| Interface with Observation Model: Combine the evidence from two MIPOMDPs' multinomial observation models (merge their counts and subtract off the extra priors). | |

| void | setPolicy (int policyNumber) |

| Interface with CLP: Tell the CLP what kind of convolution policy to use in the future. | |

| void | setHeuristicPolicyParameters (double softmaxGain, double boxSize) |

| Interface with CLP: Tell the CLP the shape of the convolution kernel to use. | |

| void | setObjectDetectorSource (std::string newFileName) |

| Change the file used by the object detector for doing detecting. This is critical if a weights file is located at an absolute path that may have changed from training time. | |

Static Public Member Functions | |

| static MIPOMDP * | loadFromFile (const char *filename) |

| Load a saved MIPOMDP from a file. The file should have been saved via the saveToFile() method. | |

Public Attributes | |

| IplImage * | currentBelief |

| Interface with MIPOMDP State: Access the current belief distribution directly. Changes each time one of the search functions is called. | |

| IplImage * | foveaRepresentation |

| Interface with MIPOMDP State: A visually informative representation of the IPP Foveal represention. | |

Detailed Description

Machine Perception Primitive: An implementation of the "Multinomial IPOMDP" algorithm from Butko and Movellan, 2009 (see Related Publications).

- Date:

- 2010

- Version:

- 0.4

Constructor & Destructor Documentation

| MIPOMDP::MIPOMDP | ( | CvSize | inputImageSize, |

| CvSize | subImageSize, | ||

| CvSize | gridSize, | ||

| int | numSubImages, | ||

| CvMat * | subImageGridPoints, | ||

| const char * | haarDetectorXMLFile | ||

| ) |

Main Constructor: Manually create an MIPOMDP.

An MIPOMDP needs to know how big the images it receives are, to what smaller size it should scale each image patch, the size (height / width) of the grid-cell tiling of the image, how many image patches there will be, the size (height/width) in grid-cells of each image patch, and what object detector to apply.

In order to be able to properly save and load object detectors, the class of the object detector must be specified in the MIPOMDP. To use an object detector other than OpenCVHaarDetector, replace "OpenCVHaarDetector" in MIPOMDP.h and MIPOMDP.cpp, and recompile the NMPT library. (setHaarCascadeScaleFactor() and setHaarCascadeMinSize() will also need to be removed, or implemented in your own object detector for successful compilation).

- Parameters:

-

inputImageSize The size of the images that will be given to the IPP to turn into MIPOMDP observations. This allocates memory for underlying data, but it can be changed easily later if needed without recreating the object by calling the changeInputSize() functions. subImageSize The common size to which all image patches will be reduced, creating the foveation effect. The smaller subImageSize is, the faster search is, and the more extreme the effect of foveation. This allocates memory for underlying data, but it can be changed easily later if needed without recreating the object by calling the changeInputSize() functions. gridSize Size of the discretization of the image. The number of POMDP states is the product of the demensions of this size (e.g. 21x21). numSubImages Number of Patches in the Image Patch Pyramid. subImageGridPoints A matrix that describes the size and shape of each level (patch) of the IP Pyramid. This must be a matrix with size [numSubImages x 2]. Each row contains the width and height of the corresponding levels. These should be in order of *decreasing* size, so that the largest Image Patch is first. For example, in Butko and Movellan CVPR 2009, we used [21 21; 15 15; 9 9; 3 3]. Finaly, note that it is not necessary that the largest patch cover the entire image. However, when the largest patch is the same size as grid-cell-matrix, special optimizations become available that reduce the complexity of the algorithm when the same image, or same frame of video, is fixated multiple times. haarDetectorXMLFile A file that was saved as the result of using OpenCV's haar-detector training facilities. In order to be able to properly save and load object detectors, the class of the object detector must be specified in the MIPOMDP. To use an object detector other than OpenCVHaarDetector, simply replace "OpenCVHaarDetector" in MIPOMDP.h and MIPOMDP.cpp, and recompile the NMPT library.

Member Function Documentation

| void MIPOMDP::addDataToObservationModel | ( | ImageDataSet * | trainingSet ) |

Interface with Observation Model: Add data to an existing multinomial observation model by recording the object detector output at different fixation points, given known face locations contained in an ImageDataSet.

Does not reset the current observation model to a uniform prior before tabulating the data.

- Parameters:

-

trainingSet An iamge dataset, consisting of image files and labeled object locations. The first two indices (0, 1) of all labels in the trainingSet should be the width and height (respectively) of the object's center in the image.

| void MIPOMDP::changeInputImageSize | ( | CvSize | newInputSize ) |

Interface with IPP: Change the size of the input image and the downsampled image patches. Omitting a newSubImageSize causes the smallest-used-scale to have a 1-1 pixel mapping with the downsampled image patch -- i.e. information is not lost in the smallest scale.

- Parameters:

-

newInputSize The size of the next image that will be searched.

| void MIPOMDP::changeInputImageSize | ( | CvSize | newInputSize, |

| CvSize | newSubImageSize | ||

| ) |

Interface with IPP: Change the size of the input image and the downsampled image patches.

- Parameters:

-

newInputSize The size of the next image that will be searched. newSubImageSize The desired size of the downsampled image patches. If the subImageSize is too small (below getMinSize()), the smallest scale is dropped and subImageSize is scaled up proportionally to the next scale. This process is repeated until subImageSIze is greater than getMinSize(). By default, minSize is 60x40.

| void MIPOMDP::combineModels | ( | MIPOMDP * | otherPomdp ) |

Interface with Observation Model: Combine the evidence from two MIPOMDPs' multinomial observation models (merge their counts and subtract off the extra priors).

- Parameters:

-

otherPomdp A second MIPOMDP with a model that has been fit to different data than this one: We can estimate the model for the combined set of data by simply adding the counts, and subtracting duplicate priors. In this way, we can efficiently compose models fit to different subsets of a larger dataset (e.g. for cross-validation).

| CvSize MIPOMDP::getGridSize | ( | ) |

| CvPoint MIPOMDP::getMostLikelyTargetLocation | ( | ) |

Interface with MIPOMDP State: Find the grid-cell that is most likely to be the location of the target.

- Returns:

- The grid-cell that is most likely to be the location of the target.

| int MIPOMDP::getNumScales | ( | ) |

Interface with IPP: The total number of levels that the IP Pyramid has.

Note that this may be different from the number of scales that the IP Pyramid is using. If the subImageSize is too small (below getMinSize()), the smallest scale is dropped and subImageSize is scaled up proportionally to the next scale. This process is repeated until subImageSIze is greater than getMinSize(). To find out how many scales that the IPP is using, call getUsedScales().

| double MIPOMDP::getProb | ( | ) |

Interface with MIPOMDP State: Get an estimate of the probability that the MIPOMDP knows the exactly correct location of the target.

I.e. what is the probability of being correct given the (MxN) alternative forced choice task, "Where is the target" (where M is the width of the grid, and N is the height).

- Returns:

- The probability that the MIPOMDP knows the exactly correct location of the target.

| double MIPOMDP::getReward | ( | ) |

Interface with MIPOMDP State: The certainty (information-reward) about the location of the target, i.e. the mutual information (minus a constant) between all previous actions/observations and the target location.

- Returns:

- The information-reward (Sum p*log(p)) of the current belief state. Has range -(1/MN)log(MN):0 (where M is the width of the grid, and N is the height).

| int MIPOMDP::getTargetCanMove | ( | ) |

| MIPOMDP * MIPOMDP::loadFromFile | ( | const char * | filename ) | [static] |

Load a saved MIPOMDP from a file. The file should have been saved via the saveToFile() method.

- Parameters:

-

filename The name of a file that was saved via the saveToFile() method.

| CvPoint MIPOMDP::recommendSearchPointForCurrentBelief | ( | ) |

Interface with MIPOMDP State: Given the current point, where does the MIPOMDP's Convolutional logistic policy recommend looking? Since the CLP (usually) gives stochastic output, this will not always return the same result, even given the same belief state.

- Returns:

- A grid-cell point that should be good to fixate.

| void MIPOMDP::resetModel | ( | ) |

Interface with Observation Model: Reset the experience-counts used to estimate the multinomial parameters. Sets all counts to 1.

The multinomial distribution probabilities are estimated from experience counts. The table of counts forms a dirichlet posterior over the parameters of the multinomials. When the counts are reset to 1, there is a flat prior over multinomial parameters, and all outcomes are seen as equally likely. This is a particularly bad model, and will make it impossible to figure out the location of the face, so only call resetCounts if you're then going to call updateProbTableCounts for enough images to build up a good model.

| void MIPOMDP::saveToFile | ( | const char * | filename ) |

Save MIPOMDP Data: Save data about the structure of the MIPOMDP so that it can be persist past the current run of the program. This includes details about the structure of the IPP, the object detector used, the parameters of the multionmial observation model, the policy used. It does not include details about the state of the MIPOMDP (the current location of the target), but rather the properties of the model.

- Parameters:

-

filename The name of the saved file.

| void MIPOMDP::saveVisualization | ( | IplImage * | grayFrame, |

| CvPoint | searchPoint, | ||

| const char * | base_filename | ||

| ) |

Interface with IPP: Save a variety of visual representations of the process of fixating with an IPP to image files.

This method saves a series of .png image files, each with a prefix given by base_filename. Images with the following suffix are created:

- FullInputImage - The full input image contained in grayFrame.

- Scale-[0:N] - The down-sampled representation of each image patch.

- FoveatedInputImage - A reconstruction of the full image using the donwsampled patches.

- FoveatedInputImageWithLooking - Same as above, with white boxes drawn around each scale.

- FoveatedInputImageWithGrid - Same as above, but with a grid overlayed showing the grid-cells.

- FullInputImageWithGrid - Full image with black rectangles showing grid-cell locations.

- FullInputImageWithLooking - Same as above, but with wite boxes drawn around each scale.

Additionally, one CSV file is created, suffix "FaceCounts.csv", which records the output of the object detector on the foveated representation in each grid-cell.

- Parameters:

-

grayFrame The image to search. This image should have size inputImageSize, and be of type IPL_DEPTH_8U with a single channel. searchPoint The center of fixation. base_filename All of the files generated by this function will be given this as a prefix.

| CvPoint MIPOMDP::searchFrameAtAllGridPoints | ( | IplImage * | grayFrame ) |

Search function: Search an image (or frame of video) at every grid-point (no early stopping is used).

Since this is taken to be a new frame, the IPP knows not to use same- frame optimizations on the first saccade, and then that it can use same-frame optimizations on subsequent fixations (if appropriate).

- Parameters:

-

grayFrame The image to search. Must be of type IPL_DEPTH_8U, 1 channel.

- Returns:

- The most likely location of the search target.

| CvPoint MIPOMDP::searchFrameAtGridPoint | ( | IplImage * | grayFrame, |

| CvPoint | searchPoint | ||

| ) | [virtual] |

Search function: Search an image (or frame of video) at a location recommended by the calling program, and update belief based on what was found.

Since this is taken to be a repeat of the last frame, the IPP knows that it can use same-frame optimizations (if appropriate).

- Parameters:

-

grayFrame The image to search. Must be of type IPL_DEPTH_8U, 1 channel. searchPoint The grid location to center the digital fovea for further image processing.

- Returns:

- The most likely location of the search target.

| CvPoint MIPOMDP::searchFrameForNFixations | ( | IplImage * | grayFrame, |

| int | numfixations | ||

| ) |

Search function: Search an image (or frame of video) at a sequence of fixation points recommended by the CLP, and update belief based on what was found. Fixates the specified number of times (no early stopping is used).

Since this is taken to be a new frame, the IPP knows not to use same- frame optimizations on the first saccade, and then that it can use same-frame optimizations on subsequent fixations (if appropriate).

- Parameters:

-

grayFrame The image to search. Must be of type IPL_DEPTH_8U, 1 channel. numfixations The number of fixations to apply.

- Returns:

- The most likely location of the search target.

| CvPoint MIPOMDP::searchFrameForNFixationsAndVisualize | ( | IplImage * | grayFrame, |

| int | numfixations, | ||

| const char * | window, | ||

| int | msec_wait | ||

| ) |

Search function: Search an image (or frame of video) at a sequence of fixation points recommended by the CLP, and update belief based on what was found. Fixates the specified number of times (no early stopping is used). Visualize the process in a provided OpenCV UI Window, at a specified frame rate.

Since this is taken to be a new frame, the IPP knows not to use same- frame optimizations on the first saccade, and then that it can use same-frame optimizations on subsequent fixations (if appropriate).

- Parameters:

-

grayFrame The image to search. Must be of type IPL_DEPTH_8U, 1 channel. numfixations The number of fixations to apply. window The string handle (name) of an OpenCV UI Window that you have previously created. Output will displayed in this window. msec_wait Number of milliseconds to wait between fixations so that the process of each fixation can be appreciated visually by the user.

- Returns:

- The most likely location of the search target.

| CvPoint MIPOMDP::searchFrameForNFixationsOpenLoop | ( | IplImage * | grayFrame, |

| int | numfixations, | ||

| int | OLPolicyType | ||

| ) |

Search function: Search an image (or frame of video) at a sequence of fixation points recommended by an open loop fixation policy, and update belief based on what was found. Fixates the specified number of times (no early stopping is used).

Since this is taken to be a new frame, the IPP knows not to use same- frame optimizations on the first saccade, and then that it can use same-frame optimizations on subsequent fixations (if appropriate).

- Parameters:

-

grayFrame The image to search. Must be of type IPL_DEPTH_8U, 1 channel. numfixations The number of fixations to apply. OLPolicyType The type of open-loop policy to use. Should be one of OpenLoopPolicy::RANDOM, OpenLoopPolicy::ORDERED, OpenLoopPolicy::SPIRAL.

- Returns:

- The most likely location of the search target.

| CvPoint MIPOMDP::searchFrameRandomlyUntilConfident | ( | IplImage * | grayFrame, |

| double | confidenceThresh | ||

| ) |

Search function: Search an image (or frame of video) at a sequence of fixation points chosen randomly, and update belief based on what was found. Employs an early-stop criterion of the first repeat fixation. Otherwise, stops at when confidence in the target location reaches a maximum value.

Since this is taken to be a new frame, the IPP knows not to use same- frame optimizations on the first saccade, and then that it can use same-frame optimizations on subsequent fixations (if appropriate).

- Parameters:

-

grayFrame The image to search. Must be of type IPL_DEPTH_8U, 1 channel. confidenceThresh Probability that max-location really contains the object before stopping.

- Returns:

- The most likely location of the search target.

| CvPoint MIPOMDP::searchFrameUntilConfident | ( | IplImage * | grayFrame, |

| double | confidenceThresh | ||

| ) |

Search function: Search an image (or frame of video) at a sequence of fixation points recommended by the CLP, and update belief based on what was found. Employs an early-stop criterion of the first repeat fixation. Otherwise, stops at when confidence in the target location reaches a maximum value.

Since this is taken to be a new frame, the IPP knows not to use same- frame optimizations on the first saccade, and then that it can use same-frame optimizations on subsequent fixations (if appropriate).

- Parameters:

-

grayFrame The image to search. Must be of type IPL_DEPTH_8U, 1 channel. confidenceThresh Probability that max-location really contains the object before stopping.

- Returns:

- The most likely location of the search target.

| CvPoint MIPOMDP::searchHighResImage | ( | IplImage * | grayFrame ) |

Search function: Apply the Object Detector to the entire input image.

The belief map is not changed by this operation. The count vector of objects found in each grid-cell is set. The location of the grid-cell with the most found objects is returned. The fovea representation is set to contain the entire high-resolution image, overlaid with grid-cells.

| CvPoint MIPOMDP::searchNewFrame | ( | IplImage * | grayFrame ) |

Search function: Search an image (or frame of video) at a location recommended by the Convolutional Logistic Policy, and update belief based on what was found.

Since this is taken to be a new frame, the IPP knows not to use same- frame optimizations.

- Parameters:

-

grayFrame The image to search. Must be of type IPL_DEPTH_8U, 1 channel.

- Returns:

- The most likely location of the search target.

| CvPoint MIPOMDP::searchNewFrameAtGridPoint | ( | IplImage * | grayFrame, |

| CvPoint | searchPoint | ||

| ) |

Search function: Search an image (or frame of video) at a location decided by the calling program. and update belief based on what was found.

Since this is taken to be a new frame, the IPP knows not to use same- frame optimizations.

- Parameters:

-

grayFrame The image to search. Must be of type IPL_DEPTH_8U, 1 channel. searchPoint The grid location to center the digital fovea for further image processing.

- Returns:

- The most likely location of the search target.

| void MIPOMDP::setGeneratePreview | ( | int | flag ) |

Interface with IPP: Turns on/off the code that modifies foveaRepresentation to visualize the process of fixating.

- Parameters:

-

flag Set to 0 if visualization is not desired (more efficient) or to 1 if visualization is desired.

| void MIPOMDP::setHaarCascadeMinSize | ( | int | size ) |

Interface with Object Detector: Sets the minimum patch size at which the classifier searches for the object. By default, this is 0, meaning that the smallest size appropriate to XML file is used. In the case of the frontal face detector provided, this happens to be 20x20 pixels.

- Parameters:

-

size The width/height of the smallest patches to try to detect the object.

| void MIPOMDP::setHaarCascadeScaleFactor | ( | double | factor ) |

Interface with Object Detector: Sets the factor by which the image-patch-search-scale is increased. Should be greater than 1. By default, the scale factor is 1.1, meaning that faces are searched for at sizes that increase by 10%.

- Parameters:

-

factor The size-granularity of object search.

| void MIPOMDP::setHeuristicPolicyParameters | ( | double | softmaxGain, |

| double | boxSize | ||

| ) |

Interface with CLP: Tell the CLP the shape of the convolution kernel to use.

- Parameters:

-

softmaxGain Has the following effect for: - ConvolutionalLogisticPolicy::BOX - The kernel is a square with integral=softmaxGain.

- ConvolutionalLogisticPolicy::GAUSSIAN - The kernel is a Gaussian with integral=softmaxGain

- ConvolutionalLogisticPolicy::IMPULSE - The kernel is an impulse response with value softmaxGain

- ConvolutionalLogisticPolicy::MAX - No effect

boxSize Has the following effect for: - ConvolutionalLogisticPolicy::BOX - The kernel is a boxSize x boxSize grid-cell square.

- ConvolutionalLogisticPolicy::GAUSSIAN - The kernel is a Gaussian with standard-deviation boxSize grid-cells.

- ConvolutionalLogisticPolicy::IMPULSE - No effect

- ConvolutionalLogisticPolicy::MAX - No effect

| void MIPOMDP::setMinSize | ( | CvSize | minsize ) |

Interface with IPP: Set the minimum allowed subImageSize.

If the subImageSize is too small (below getMinSize()), the smallest scale is dropped and subImageSize is scaled up proportionally to the next scale. This process is repeated until subImageSIze is greater than getMinSize(). By default, minSize is 60x40.

The will have no effect on the current subImageSize, or getUsedScales() until changeInputImageSize() is called.

- Parameters:

-

minsize The minimum allowed subImageSize.

| void MIPOMDP::setObjectDetectorSource | ( | std::string | newFileName ) |

Change the file used by the object detector for doing detecting. This is critical if a weights file is located at an absolute path that may have changed from training time.

When an ObjectDetector is loaded from disk, it will try to reload its weights file from the same source used in training. If this fails, a warning will be printed, and the detector's source will need to be set.

| void MIPOMDP::setPolicy | ( | int | policyNumber ) |

Interface with CLP: Tell the CLP what kind of convolution policy to use in the future.

- Parameters:

-

policyNumber Must be one of:

| void MIPOMDP::setTargetCanMove | ( | int | flag ) |

Interface with MIPOMDP: Tell MIPOMDP whether there is a possiblity that the target can move. When searching a static frame, set this to 0. When searching sequential frames of a movie, set this to 1.

By default, a simple dynamical model is assumed. The object is expected to move with brownian motion, with a small probability of jumping randomly anywhere in the image.

NOTE: If a frame is searched with one of the search functions that performs multiple fixations, dynamics are automatically, temporarily disabled. At the end of the function call, the targetCanMove is restored to its former state.

- Parameters:

-

flag Set to 1 (default) if searching sequential frames of a movie. Set to 0 if searching a static image.

| void MIPOMDP::trainObservationModel | ( | ImageDataSet * | trainingSet ) |

Interface with Observation Model: Fit a multinomial observation model by recording the object detector output at different fixation points, given known face locations contained in an ImageDataSet.

Resets the current observation model to a uniform prior before tabulating the data.

- Parameters:

-

trainingSet An iamge dataset, consisting of image files and labeled object locations. The first two indices (0, 1) of all labels in the trainingSet should be the width and height (respectively) of the object's center in the image.

| void MIPOMDP::useSameFrameOptimizations | ( | int | flag ) |

Interface with IPP: Set whether same-frame optimizations are being used.

Under certain conditions, the computation needed to search a frame a second time are less than the computations needed to search it a first time. In these conditions, the same-frame optimizations will automatically be used. However, this requires setting setNewImage() each time the image to search changes (i.e. a new frame). If you are in a situation in which you know that each frame will only be fixated at one point, you may wish to turn same-frame optimizations off.

Generally same-frame optimizations should not be turned on unless you know that they were turned on automatically. Turning them on when inappropriate will lead to incorrect behavior. In general, it is appropriate to turn them on if the first scale (largest scale) in the IPP is the same size as entire visual field.

- Parameters:

-

flag If 0, Same Frame Optimizations will not be used. If 1, Same Frame Optimizations will be used regardless of whether or not it's appropriate. Be careful setting this to 1.

Member Data Documentation

| IplImage* MIPOMDP::currentBelief |

Interface with MIPOMDP State: Access the current belief distribution directly. Changes each time one of the search functions is called.

This is representd as an image of type IPL_DEPTH_32F, 1 channle. This is so that the belief-distribution can be visualized directly in a GUI.

| IplImage* MIPOMDP::foveaRepresentation |

Interface with MIPOMDP State: A visually informative representation of the IPP Foveal represention.

This is meant as an image that is appropriate for display in a GUI, to visualize the algorithm in action. The image has the same size as inputImageSize. In order to increase efficiency, generation of this visualization should be disabled if it is not going to be accessed. This can be achieved by calling setGeneratePreview(0).

The documentation for this class was generated from the following files:

- /Users/nick/projects/NickThesis/Code/OpenCV/src/MIPOMDP.h

- /Users/nick/projects/NickThesis/Code/OpenCV/src/MIPOMDP.cpp

1.7.2

1.7.2