Auxiliary Tool: The main data structure for the MIPOMDP algorithm. It is responsible for generating the MIPOMDP observation vector. The ImagePatchPyramid (IPP) is described in detail in Butko & Movellan 2009 (see Related Publications).


#include <ImagePatchPyramid.h>

Inherited by MOImagePatchPyramid.

Public Member Functions | |

| ImagePatchPyramid (CvSize inputImageSize, CvSize subImageSize, CvSize gridSize, int numSubImages, CvMat *subImageGridPoints, OpenCVHaarDetector *detector) | |

| Main Constructor: Manually create an IPP. | |

| ImagePatchPyramid () | |

| Placeholder Constructor. | |

| ImagePatchPyramid (ImagePatchPyramid *ippToCopy) | |

| Deep Copy Constructor: Create an IPP that is identical to the one copied. | |

| virtual | ~ImagePatchPyramid () |

| Default Destructor. | |

| virtual void | searchFrameAtGridPoint (IplImage *grayFrame, CvPoint searchPoint) |

| The main method for generating an observation vector: given an image, generate a count of object-detector firings in each grid-cell based on a fixation point. After this method is called, the resulting observation is stored in the objectCount element. | |

| virtual CvPoint | searchHighResImage (IplImage *grayFrame) |

| Apply the object detector to the entire image. This is used for comparing the speed and accuracy of the foveated search strategy. After this method is called, the count of objects that the object detector found in each grid-cell in the high resolution image is stored in objectCount. The location of the object is inferred as being the grid-cell with the highest count. | |

| int | getNumScales () |

| The total number of levels that the IP Pyramid has. | |

| int | getUsedScales () |

| The total number of levels that the IP Pyramid is currently using. | |

| int | getGridCellHeightOfScale (int i) |

| The height (measured in grid-cells) of the ith largest scale, indexed from 0. | |

| int | getGridCellWidthOfScale (int i) |

| The width (measured in grid-cells) of the ith largest scale, indexed from 0. | |

| CvRect | getVisibleRegion (CvPoint searchPoint) |

| Find the region of the belief map that is visible at any scale when fixating a grid-point. | |

| CvSize | getInputImageSize () |

| The expected size of the next input image. | |

| CvSize | getSubImageSize () |

| The common reference size that image patches are down-scaled to. | |

| CvSize | getMinSize () |

| Get the minimum allowed subImageSize. | |

| CvPoint | gridPointForPixel (CvPoint pixel) |

| Map a pixel location in the original image into a grid-cell. | |

| CvPoint | pixelForGridPoint (CvPoint gridPoint) |

| Find the pixel in the original image that is in the center of a grid-cell. | |

| int | getGeneratePreview () |

| Find out if the foveaRepresentation preview image is being set after every fixation. This may be turned off in order to improve efficiency. | |

| int | getSameFrameOptimizations () |

| Check whether same-frame optimizations are being used. | |

| void | changeInputImageSize (CvSize newInputSize) |

| Change the size of the input image and the downsampled image patches. Omitting a newSubImageSize causes the smallest-used-scale to have a 1-1 pixel mapping with the downsampled image patch -- i.e. information is not lost in the smallest scale. | |

| void | changeInputImageSize (CvSize newInputSize, CvSize newSubImageSize) |

| Change the size of the input image and the downsampled image patches. | |

| void | setGeneratePreview (int flag) |

| Turns on/off the code that modifies foveaRepresentation to visualize the process of fixating. | |

| void | setMinSize (CvSize minsize) |

| Set the minimum allowed subImageSize. | |

| void | setNewImage () |

| Tell the IPP not to use the same-frame optimizations for the next frame. | |

| void | useSameFrameOptimizations (int flag) |

| Set whether same-frame optimizations are being used. | |

| void | setObjectDetectorSource (std::string newFileName) |

| Change the file used by the object detector for detection. This is critical if a weights file is located at an absolute path that may have changed since training time. | |

| void | saveVisualization (IplImage *grayFrame, CvPoint searchPoint, const char *base_filename) |

| Save a variety of visual representations of the process of fixating with an IPP to image files. | |

Public Attributes | |

| IplImage * | objectCount |

| Number of times the object-detector fired in each grid-cell during the last call to searchFrameAtGridPoint() or searchHighResImage(). | |

| IplImage * | foveaRepresentation |

| A visually informative representation of the IPP foveal representation. | |

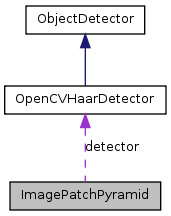

| OpenCVHaarDetector * | detector |

| A pointer to the object detector used in searching. By exposing this variable, it is easy to switch out the object detector that is applied to an IPP, or to change its properties. | |

Friends | |

| std::ostream & | operator<< (std::ostream &ofs, ImagePatchPyramid *a) |

| Write to a file. | |

| std::istream & | operator>> (std::istream &ifs, ImagePatchPyramid *a) |

| Read from a file. | |

Detailed Description

Auxiliary Tool: The main data structure for the MIPOMDP algorithm. It is responsible for generating the MIPOMDP observation vector. The ImagePatchPyramid (IPP) is described in detail in Butko & Movellan 2009 (see Related Publications).

The IPP data structure divides an image into grid-cells. It then extracts image patches comprising different numbers of grid-cells, scales them down to a common size, and searches each patch for the target object. Every object found in any grid-cell of any patch is added to the count-vector element corresponding to that grid-cell. This count-vector forms the basis for the MIPOMDP observation.

In order to perform the above behavior, an IPP needs to know how big the images it receives are, to what smaller size it should scale each image patch, the size (height/width) of the grid-cell tiling of the image, how many image patches there will be, the size (height/width) in grid-cells of each image patch, and what object detector to apply.
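The grid-cell counting described above can be sketched without OpenCV. This is a minimal illustration only, not the library's implementation: `Point`, `Size`, `gridCellForPixel`, and `countDetections` are hypothetical names, the tiling is assumed to be uniform, and each detection is assumed to be credited to the cell that contains its center.

```cpp
#include <vector>

// Hypothetical stand-ins for CvPoint / CvSize so the sketch compiles without OpenCV.
struct Point { int x; int y; };
struct Size  { int width; int height; };

// Map a pixel to the grid-cell that contains it, assuming a uniform tiling.
Point gridCellForPixel(Point pixel, Size imageSize, Size gridSize) {
    Point cell;
    cell.x = pixel.x * gridSize.width  / imageSize.width;
    cell.y = pixel.y * gridSize.height / imageSize.height;
    // Clamp so that pixels on the far edge still land in a valid cell.
    if (cell.x >= gridSize.width)  cell.x = gridSize.width  - 1;
    if (cell.y >= gridSize.height) cell.y = gridSize.height - 1;
    return cell;
}

// Build a row-major count vector: one increment per detection center.
std::vector<int> countDetections(const std::vector<Point>& detectionCenters,
                                 Size imageSize, Size gridSize) {
    std::vector<int> counts(gridSize.width * gridSize.height, 0);
    for (const Point& p : detectionCenters) {
        Point cell = gridCellForPixel(p, imageSize, gridSize);
        counts[cell.y * gridSize.width + cell.x] += 1;
    }
    return counts;
}
```

In the real class the detections come from every patch of the pyramid, so a single grid-cell can accumulate counts from several scales. The sketch does not handle negative pixel coordinates.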

- Date: 2010

- Version: 0.4

Constructor & Destructor Documentation

| ImagePatchPyramid::ImagePatchPyramid | ( | CvSize inputImageSize, CvSize subImageSize, CvSize gridSize, int numSubImages, CvMat * subImageGridPoints, OpenCVHaarDetector * detector | ) |

Main Constructor: Manually create an IPP.

An IPP needs to know how big the images it receives are, to what smaller size it should scale each image patch, the size (height/width) of the grid-cell tiling of the image, how many image patches there will be, the size (height/width) in grid-cells of each image patch, and what object detector to apply.

- Parameters:

  - inputImageSize: The size of the images that will be given to the IPP to turn into MIPOMDP observations. This allocates memory for underlying data, but it can be changed later without recreating the object by calling the changeInputImageSize() functions.

  - subImageSize: The common size to which all image patches will be reduced, creating the foveation effect. The smaller subImageSize is, the faster the search and the more extreme the effect of foveation. This allocates memory for underlying data, but it can be changed later without recreating the object by calling the changeInputImageSize() functions.

  - gridSize: Size of the discretization of the image. The number of POMDP states is the product of the dimensions of this size (e.g. 21x21).

  - numSubImages: Number of patches in the Image Patch Pyramid.

  - subImageGridPoints: A matrix that describes the size and shape of each level (patch) of the IP Pyramid. This must be a matrix with size [numSubImages x 2]. Each row contains the width and height of the corresponding level. Rows should be in order of *decreasing* size, so that the largest image patch is first. For example, in Butko and Movellan CVPR 2009, we used [21 21; 15 15; 9 9; 3 3]. Finally, note that the largest patch does not need to cover the entire image. However, when the largest patch is the same size as the grid-cell matrix, special optimizations become available that reduce the complexity of the algorithm when the same image, or the same frame of video, is fixated multiple times.

  - detector: A pointer to an object detector. This object detector will be applied to each patch in the IPP, forming the basis for the MIPOMDP observation model.
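The ordering requirement on subImageGridPoints can be checked before constructing an IPP. The following is a sketch under stated assumptions, not part of the library: `PatchSize`, `isValidPyramid`, and `largestPatchCoversGrid` are hypothetical helpers, and the rule that no level may exceed the grid is an assumption that the documentation does not state explicitly.

```cpp
#include <cstddef>
#include <vector>

// Width and height of one pyramid level, measured in grid-cells (hypothetical type).
struct PatchSize { int width; int height; };

// True if the levels are non-empty, positive, fit inside the grid (assumed rule),
// and are listed largest-first, as the constructor documentation requires.
bool isValidPyramid(const std::vector<PatchSize>& levels, int gridWidth, int gridHeight) {
    if (levels.empty()) return false;
    for (std::size_t i = 0; i < levels.size(); ++i) {
        const PatchSize& p = levels[i];
        if (p.width < 1 || p.height < 1) return false;
        if (p.width > gridWidth || p.height > gridHeight) return false;
        if (i > 0 && (p.width > levels[i - 1].width || p.height > levels[i - 1].height)) {
            return false;  // a later level is larger than an earlier one
        }
    }
    return true;
}

// True when the first (largest) level spans the whole grid, which is the
// condition the text associates with the same-frame optimizations.
bool largestPatchCoversGrid(const std::vector<PatchSize>& levels, int gridWidth, int gridHeight) {
    return !levels.empty() &&
           levels[0].width == gridWidth && levels[0].height == gridHeight;
}
```

With the example levels [21 21; 15 15; 9 9; 3 3] on a 21x21 grid, both checks pass. On a larger grid the layout stays valid but the largest patch no longer covers the grid.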

| ImagePatchPyramid::ImagePatchPyramid | ( | ) |

Placeholder Constructor.

Used to create a placeholder for an IPP, which can then be read from a file stream using the >> operator. Typical usage for this constructor is:

ipp = new ImagePatchPyramid();

in >> ipp;

| ImagePatchPyramid::~ImagePatchPyramid | ( | ) | [virtual] |

Default Destructor.

Deallocates all memory associated with the IPP.

Member Function Documentation

| void ImagePatchPyramid::changeInputImageSize | ( | CvSize newInputSize, CvSize newSubImageSize | ) |

Change the size of the input image and the downsampled image patches.

- Parameters:

  - newInputSize: The size of the next image that will be searched.

  - newSubImageSize: The desired size of the downsampled image patches. If the subImageSize is too small (below getMinSize()), the smallest scale is dropped and subImageSize is scaled up proportionally to the next scale. This process is repeated until subImageSize is greater than getMinSize(). By default, minSize is 60x40.
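The scale-dropping rule can be illustrated with a short sketch. This is one plausible reading of the description, not the library's code: `Size`, `ScaleResult`, and `dropSmallScales` are hypothetical names, "too small" is assumed to mean that either dimension is below the minimum, and "scaled up proportionally" is assumed to mean multiplying by the ratio between the next level and the dropped level (in grid-cells).

```cpp
#include <vector>

struct Size { int width; int height; };  // hypothetical stand-in for CvSize

struct ScaleResult {
    Size subImageSize;  // size after any scales have been dropped
    int  usedScales;    // number of levels still in use
};

// levels: patch sizes in grid-cells, largest first.
ScaleResult dropSmallScales(Size requested, Size minSize, const std::vector<Size>& levels) {
    ScaleResult r;
    r.subImageSize = requested;
    r.usedScales = static_cast<int>(levels.size());
    while (r.usedScales > 1 &&
           (r.subImageSize.width < minSize.width ||
            r.subImageSize.height < minSize.height)) {
        const Size& dropped = levels[r.usedScales - 1];  // current smallest level
        const Size& next    = levels[r.usedScales - 2];  // level that becomes the smallest
        // Grow the reference size by the ratio between the two levels.
        r.subImageSize.width  = r.subImageSize.width  * next.width  / dropped.width;
        r.subImageSize.height = r.subImageSize.height * next.height / dropped.height;
        --r.usedScales;
    }
    return r;
}
```

Under these assumptions, requesting 30x20 with levels [21 21; 15 15; 9 9; 3 3] and the default 60x40 minimum drops the 3x3 level and yields a 90x60 subImageSize with three scales in use.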

| void ImagePatchPyramid::changeInputImageSize | ( | CvSize | newInputSize ) |

Change the size of the input image and the downsampled image patches. Omitting a newSubImageSize causes the smallest-used-scale to have a 1-1 pixel mapping with the downsampled image patch -- i.e. information is not lost in the smallest scale.

- Parameters:

  - newInputSize: The size of the next image that will be searched.

| CvSize ImagePatchPyramid::getMinSize | ( | ) |

Get the minimum allowed subImageSize.

If the subImageSize is too small (below getMinSize()), the smallest scale is dropped and subImageSize is scaled up proportionally to the next scale. This process is repeated until subImageSize is greater than getMinSize(). By default, minSize is 60x40.

| int ImagePatchPyramid::getNumScales | ( | ) |

The total number of levels that the IP Pyramid has.

Note that this may be different from the number of scales that the IP Pyramid is *using*. If the subImageSize is too small (below getMinSize()), the smallest scale is dropped and subImageSize is scaled up proportionally to the next scale. This process is repeated until subImageSize is greater than getMinSize(). To find out how many scales the IPP is using, call getUsedScales().

| int ImagePatchPyramid::getSameFrameOptimizations | ( | ) |

Check whether same-frame optimizations are being used.

Under certain conditions, searching a frame a second time requires less computation than searching it the first time. In these conditions, the same-frame optimizations are used automatically. However, this requires calling setNewImage() each time the image to search changes (i.e. for each new frame). If you know that each frame will be fixated at only one point, you may wish to turn same-frame optimizations off.

| CvSize ImagePatchPyramid::getSubImageSize | ( | ) |

The common reference size that image patches are down-scaled to.

This is not necessarily the value set in the constructor, or in changeInputImageSize(). If the requested subImageSize is too small (below getMinSize()), the smallest scale is dropped and subImageSize is scaled up proportionally to the next scale. This process is repeated until subImageSize is greater than getMinSize().

| int ImagePatchPyramid::getUsedScales | ( | ) |

The total number of levels that the IP Pyramid is currently using.

Note that this may be different from the number of scales that the IP Pyramid has. If the subImageSize is too small (below getMinSize()), the smallest scale is dropped and subImageSize is scaled up proportionally to the next scale. This process is repeated until subImageSize is greater than getMinSize(). To find out how many scales the IPP has, call getNumScales().

| CvRect ImagePatchPyramid::getVisibleRegion | ( | CvPoint | searchPoint ) |

Find the region of the belief map that is visible at any scale when fixating a grid-point.

NOTE: We assume that all patches are concentric, and that the largest image patch comes first.

- Parameters:

  - searchPoint: The grid-cell center of fixation.
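Because the patches are assumed to be concentric with the largest first, the visible region is determined by the largest patch alone. The sketch below illustrates that idea. It is not the library's implementation: `Rect` and `visibleRegion` are hypothetical, and clipping the patch at the grid border (rather than shifting it back inside the grid) is an assumption.

```cpp
#include <algorithm>

// Hypothetical stand-in for CvRect, measured in grid-cells.
struct Rect { int x; int y; int width; int height; };

// Region covered by the largest patch when centered on grid-cell (cx, cy),
// clipped to a grid of gridW x gridH cells.
Rect visibleRegion(int cx, int cy, int patchW, int patchH, int gridW, int gridH) {
    int left   = cx - patchW / 2;
    int top    = cy - patchH / 2;
    int right  = left + patchW;   // exclusive bound
    int bottom = top + patchH;    // exclusive bound
    left   = std::max(left, 0);
    top    = std::max(top, 0);
    right  = std::min(right, gridW);
    bottom = std::min(bottom, gridH);
    Rect r;
    r.x = left;
    r.y = top;
    r.width  = std::max(right - left, 0);
    r.height = std::max(bottom - top, 0);
    return r;
}
```

Under these assumptions, a 15x15 patch centered at (10, 10) on a 21x21 grid covers cells 3 through 17 in each direction, while the same patch centered at (0, 0) is clipped to an 8x8 region.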

| void ImagePatchPyramid::saveVisualization | ( | IplImage * grayFrame, CvPoint searchPoint, const char * base_filename | ) |

Save a variety of visual representations of the process of fixating with an IPP to image files.

This method saves a series of .png image files, each with a prefix given by base_filename. Images with the following suffixes are created:

- FullInputImage - The full input image contained in grayFrame.

- Scale-[0:N] - The down-sampled representation of each image patch.

- FoveatedInputImage - A reconstruction of the full image using the downsampled patches.

- FoveatedInputImageWithLooking - Same as above, with white boxes drawn around each scale.

- FoveatedInputImageWithGrid - Same as above, but with a grid overlaid showing the grid-cells.

- FullInputImageWithGrid - Full image with black rectangles showing grid-cell locations.

- FullInputImageWithLooking - Same as above, but with white boxes drawn around each scale.

Additionally, one CSV file is created, suffix "FaceCounts.csv", which records the output of the object detector on the foveated representation in each grid-cell.

- Parameters:

  - grayFrame: The image to search. This image should have size inputImageSize, and be of type IPL_DEPTH_8U with a single channel.

  - searchPoint: The center of fixation.

  - base_filename: All of the files generated by this function will be given this as a prefix.

| void ImagePatchPyramid::searchFrameAtGridPoint | ( | IplImage * grayFrame, CvPoint searchPoint | ) | [virtual] |

The main method for generating an observation vector: given an image, generate a count of object-detector firings in each grid-cell based on a fixation point. After this method is called, the resulting observation is stored in the objectCount element.

- Parameters:

  - grayFrame: The image to search. This image should have size inputImageSize, and be of type IPL_DEPTH_8U with a single channel.

  - searchPoint: The grid-cell center of fixation.

| CvPoint ImagePatchPyramid::searchHighResImage | ( | IplImage * | grayFrame ) | [virtual] |

Apply the object detector to the entire image. This is used for comparing the speed and accuracy of the foveated search strategy. After this method is called, the count of objects that the object detector found in each grid-cell in the high resolution image is stored in objectCount. The location of the object is inferred as being the grid-cell with the highest count.

- Parameters:

  - grayFrame: The image to search. This image should have size inputImageSize, and be of type IPL_DEPTH_8U with a single channel.

- Returns:

- The grid-cell location with the highest count.
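Inferring the object location as the grid-cell with the highest count amounts to an argmax over the count grid. A minimal sketch follows, assuming a row-major buffer of 8-bit counts. `GridPoint` and `argmaxCell` are hypothetical names, and breaking ties in favor of the first cell in row-major order is an assumption, since the documentation does not say how ties are resolved.

```cpp
#include <vector>

struct GridPoint { int x; int y; };  // hypothetical stand-in for CvPoint

// Return the grid-cell with the largest count in a row-major count buffer.
// Ties go to the first cell encountered (assumed tie-breaking rule).
GridPoint argmaxCell(const std::vector<unsigned char>& counts, int gridWidth, int gridHeight) {
    GridPoint best = {0, 0};
    int bestCount = -1;
    for (int y = 0; y < gridHeight; ++y) {
        for (int x = 0; x < gridWidth; ++x) {
            int c = counts[y * gridWidth + x];
            if (c > bestCount) {
                bestCount = c;
                best.x = x;
                best.y = y;
            }
        }
    }
    return best;
}
```

When every count is zero, this sketch returns cell (0, 0), so a caller may want to inspect the counts before trusting the location.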

| void ImagePatchPyramid::setGeneratePreview | ( | int | flag ) |

Turns on/off the code that modifies foveaRepresentation to visualize the process of fixating.

- Parameters:

  - flag: Set to 0 if visualization is not desired (more efficient) or to 1 if visualization is desired.

| void ImagePatchPyramid::setMinSize | ( | CvSize | minsize ) |

Set the minimum allowed subImageSize.

If the subImageSize is too small (below getMinSize()), the smallest scale is dropped and subImageSize is scaled up proportionally to the next scale. This process is repeated until subImageSize is greater than getMinSize(). By default, minSize is 60x40.

This will have no effect on the current subImageSize or on getUsedScales() until changeInputImageSize() is called.

- Parameters:

  - minsize: The minimum allowed subImageSize.

| void ImagePatchPyramid::setNewImage | ( | ) |

Tell the IPP not to use the same-frame optimizations for the next frame.

Call this every time the image you are about to search differs from the one you searched before (unless same-frame optimizations have been turned off with the useSameFrameOptimizations() function).

When interfacing with the IPP via the MIPOMDP class, setNewImage() is always called for all search methods except searchFrameAtGridPoint().
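The calling protocol can be illustrated with a toy stand-in. This sketch does not reproduce how the IPP caches work internally. `SameFrameCache` is a hypothetical struct that only models the contract described above: the first search of a frame is a full search, later searches of the same frame may reuse work, and setNewImage() must be called whenever the frame changes.

```cpp
// Toy model of the same-frame contract (hypothetical, not the library's code).
struct SameFrameCache {
    bool enabled = true;       // mirrors useSameFrameOptimizations(1)
    bool frameCached = false;  // true once the current frame has been searched
    int fullSearches = 0;      // searches that had to process the frame from scratch
    int reusedSearches = 0;    // searches that reused work from the same frame

    // Signal that the next search will receive a different image.
    void setNewImage() { frameCached = false; }

    // Stand-in for one fixation on the current frame.
    void search() {
        if (enabled && frameCached) {
            ++reusedSearches;
        } else {
            ++fullSearches;
            frameCached = true;
        }
    }
};
```

If the frame changes and setNewImage() is not called, the model would count the next search as reused, which corresponds to the stale-result risk that the text warns about.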

| void ImagePatchPyramid::setObjectDetectorSource | ( | std::string | newFileName ) |

Change the file used by the object detector for detection. This is critical if a weights file is located at an absolute path that may have changed since training time.

When an ObjectDetector is loaded from disk, it will try to reload its weights file from the same source used in training. If this fails, a warning will be printed, and the detector's source will need to be set.

| void ImagePatchPyramid::useSameFrameOptimizations | ( | int | flag ) |

Set whether same-frame optimizations are being used.

Under certain conditions, searching a frame a second time requires less computation than searching it the first time. In these conditions, the same-frame optimizations are used automatically. However, this requires calling setNewImage() each time the image to search changes (i.e. for each new frame). If you know that each frame will be fixated at only one point, you may wish to turn same-frame optimizations off.

Generally, same-frame optimizations should not be turned on unless you know that they were turned on automatically. Turning them on when inappropriate will lead to incorrect behavior. In general, it is appropriate to turn them on if the first (largest) scale in the IPP is the same size as the entire visual field.

- Parameters:

  - flag: If 0, same-frame optimizations will not be used. If 1, same-frame optimizations will be used regardless of whether or not it is appropriate. Be careful when setting this to 1.

Member Data Documentation

| OpenCVHaarDetector* ImagePatchPyramid::detector |

A pointer to the object detector used in searching. By exposing this variable, it is easy to switch out the object detector that is applied to an IPP, or to change its properties.

For example, with an OpenCVHaarDetector, the search granularity and minimum-patch-size parameters can be changed.

| IplImage* ImagePatchPyramid::foveaRepresentation |

A visually informative representation of the IPP foveal representation.

This is meant as an image that is appropriate for display in a GUI, to visualize the algorithm in action. The image has the same size as inputImageSize. In order to increase efficiency, generation of this visualization should be disabled if it is not going to be accessed. This can be achieved by calling setGeneratePreview(0).

| IplImage* ImagePatchPyramid::objectCount |

Number of times the object-detector fired in each grid-cell during the last call to searchFrameAtGridPoint() or searchHighResImage().

Has IplImage type IPL_DEPTH_8U, 1 channel.
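Reading a count out of an 8-bit, single-channel image requires stepping through rows by the row stride rather than by the image width, and treating each byte as unsigned. The sketch below shows this with a stand-in struct so that it compiles without OpenCV. `CountImage` and `countAt` are hypothetical; the field names mirror the `width`, `height`, `widthStep`, and `imageData` fields of `IplImage`.

```cpp
// Stand-in carrying the IplImage fields needed to read an 8-bit, 1-channel image.
struct CountImage {
    int width;              // number of grid-cells per row
    int height;             // number of rows
    int widthStep;          // bytes per row, including any padding
    const char* imageData;  // first byte of the first row
};

// Read the count stored for grid-cell (x, y).
int countAt(const CountImage& img, int x, int y) {
    // Rows are widthStep bytes apart; widthStep may be larger than width.
    const unsigned char* row =
        reinterpret_cast<const unsigned char*>(img.imageData + y * img.widthStep);
    return row[x];  // unsigned, so counts above 127 are read correctly
}
```

With a real `IplImage* objectCount`, the same indexing would apply to `objectCount->imageData` and `objectCount->widthStep`.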

The documentation for this class was generated from the following files:

- /Users/nick/projects/NickThesis/Code/OpenCV/src/ImagePatchPyramid.h

- /Users/nick/projects/NickThesis/Code/OpenCV/src/ImagePatchPyramid.cpp
